I want to create a prototype app for bundling support on both the server and the client. The idea behind bundling is to have a single PHP process handle the creation/update of files on the server, instead of a separate PUT request for each file.

I am using the word "prototype" because I want to verify the design with the extended smashbox framework, which is also in a prototype phase right now.

Here are some specifications:

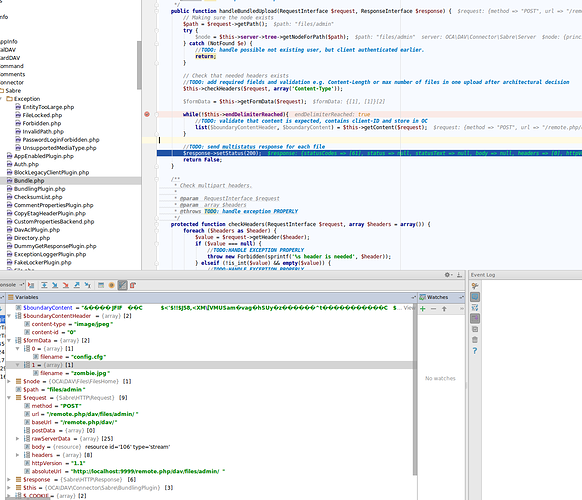

- A POST request from the client side triggers a single PHP process, which handles the bundle processing.

- The bundle is a non-compressed ZIP file containing files from various locations on the client side, each associated with a unique ID.

- The POST request contains the WebDAV details of each file, mapped to its unique ID. This mapping is required to decide the end location of each file.

- Upon receiving the POST request, the server extracts each file to its required location.

- This feature could exist in parallel with, or be made obsolete by, other future features such as HTTP/2 or WebDAV extensions like MPUT, if they turn out to perform better. It is also possible that HTTP/2 would be a further improvement on top of bundling.
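To make the ID mapping concrete, here is a minimal sketch in Python (not the PHP server code) of how a stored ZIP and its ID-to-destination mapping could fit together. The function names, the `file-N` ID scheme, and the in-memory "extraction" are all assumptions for illustration; the actual request format is not decided here.

```python
import io
import zipfile


def build_bundle(files):
    """Pack files into a stored (non-compressed) ZIP.

    `files` maps a destination path to its bytes content. Returns
    (zip_bytes, mapping), where mapping is unique ID -> destination path.
    """
    buffer = io.BytesIO()
    mapping = {}
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_STORED) as archive:
        for index, (destination, content) in enumerate(files.items()):
            file_id = f"file-{index}"  # hypothetical ID scheme
            archive.writestr(file_id, content)
            mapping[file_id] = destination
    return buffer.getvalue(), mapping


def unpack_bundle(zip_bytes, mapping):
    """Resolve each ZIP entry to its destination, as the server would.

    Returns destination path -> content; a real server would write
    each file to storage instead of keeping it in memory.
    """
    resolved = {}
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for file_id, destination in mapping.items():
            resolved[destination] = archive.read(file_id)
    return resolved
```

The client would send `zip_bytes` together with `mapping` in one POST; the server then only needs the mapping to know where each entry belongs.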

Unknowns:

* Where is the proper place in the server code to implement this without breaking compatibility with older clients?

* Possible implications on the server side that could prevent the implementation.

Please share your thoughts.

Piotr

CURRENT:

* For now, no ZIP: a multipart request containing a JSON part with the metadata, plus the files.
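A rough sketch of what such a multipart body could look like, written in Python for illustration only. The `multipart/related` content type, the `X-File-ID` header, and the metadata fields (`path`, `length`) are assumptions, not an agreed format; the parser is deliberately naive and assumes the boundary never occurs inside file content.

```python
import json
import uuid

CRLF = b"\r\n"


def build_multipart(files, boundary=None):
    """Build a multipart body: one JSON metadata part, then one part per file.

    `files` maps a destination path to its bytes content.
    Returns (content_type_header_value, body_bytes).
    """
    boundary = boundary or uuid.uuid4().hex
    delimiter = b"--" + boundary.encode("ascii")
    # Metadata: hypothetical file ID -> destination path and length.
    metadata = {
        str(i): {"path": path, "length": len(data)}
        for i, (path, data) in enumerate(files.items())
    }
    chunks = [
        delimiter,
        b"Content-Type: application/json",
        b"",
        json.dumps(metadata).encode("utf-8"),
    ]
    for i, data in enumerate(files.values()):
        chunks += [
            delimiter,
            b"Content-Type: application/octet-stream",
            b"X-File-ID: " + str(i).encode("ascii"),  # hypothetical header
            b"",
            data,
        ]
    chunks += [delimiter + b"--", b""]
    return f'multipart/related; boundary="{boundary}"', CRLF.join(chunks)


def parse_multipart(body, boundary):
    """Naive server-side parse: returns destination path -> content."""
    delimiter = b"--" + boundary.encode("ascii")
    # Drop the (empty) preamble and the closing "--" marker.
    raw_parts = body.split(delimiter)[1:-1]
    parts = []
    for raw in raw_parts:
        # Each part is CRLF + headers + blank line + payload + CRLF.
        head, _, payload = raw[2:-2].partition(CRLF + CRLF)
        headers = dict(line.split(b": ", 1) for line in head.split(CRLF))
        parts.append((headers, payload))
    metadata = json.loads(parts[0][1])
    resolved = {}
    for headers, payload in parts[1:]:
        file_id = headers[b"X-File-ID"].decode("ascii")
        resolved[metadata[file_id]["path"]] = payload
    return resolved
```

Compared with the ZIP variant, the metadata travels in the same body as the files, so the server can read the mapping first and stream each file part to its destination.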